Blog

House of Mind - Fastbin Variant Revived

3/22/2021

Introduction

Have you ever discovered something amazing only to later realize that somebody else had already beaten you to it? In this article, I wanted to describe a new (rediscovered) GLibC heap exploitation method that works on all versions of the allocator. With this method, an attacker can write a heap value to an arbitrary location (WRITE-WHERE primitive).

When I started looking for new ways to exploit the allocator via the non-main arena chunks, I thought I had found a new technique! However, part way through writing this article, I reread the original post (Malloc Malefaricum) on the House of Mind only to realize that there is a fastbin variant of the attack as well. The original use case for the fastbin variant of the House of Mind writes a heap pointer to a GOT address to jump to shellcode. But, this technique to trivially pop a shell is no longer viable because of NX. With the original House of Mind being patched and the fastbin variant no longer being an auto shell, this technique was mostly forgotten about, waiting to be rediscovered for a new purpose. So, new or not, nobody seems to be talking about this technique. In this article I wanted to shed light onto the House of Mind's Fastbin Variant as a useful WRITE-WHERE primitive and discuss when it can be used.

This post will (hopefully) have a POC in how2heap; the link is currently to a pull request with all of the code. For more security related content, check out this blog for other things, such as the House of Muney heap exploitation technique.

Before we get to the meat of the technique, we will go over how the heap allocator works. I cannot stress enough how important understanding the allocator is for heap exploitation. Trying to pwn the heap without understanding the inner workings of the allocator is like trying to shot a fish in a lake.

Chunks

Chunks are the main object that users interact with. There are two main states to a chunk: allocated and free. The following picture demonstrates a free chunk:

On an allocated (non-free) chunk, the third and fourth field are used as data sections. However, on a free chunk, the third field is used in order to store a pointer to other freed chunks. This field is known as the forward pointer or the Fd field. The fourth field is exactly the same, except that it stores a backwards pointer or bk.

Bins

When using Malloc, chunks that are freed are put into a local storage to be reused. The storage locations for the free chunks are called bins.

There are a multiple kinds of bins for different sizes and situations. All bins use a linked list data structure in order to keep track of the free chunks in the bin. For the purposes of this technique, only a single bin needs to be understood: fastbins.

Fastbin

Prior to version 2.26 (current at 2.33), the fastbin was the first location where chunks were put into, if the size matched up. Fastbin chunks are very small chunks with the idea that small chunk sizes are going to be used frequently. On 64-bit systems (assumed for the rest of the article) the bins range from chunk sizes of 0x20-0x80 bytes in 0x10 byte intervals.

Fastbins use a singly linked list, while several of the other bins use doubly linked lists. This means that free chunks only have a single pointer to the chunk in front of it ('fd' field in figure 1). Because of this, validation of fastbin pointers is difficult to do.

Only fastbins are necessary for understanding how this technique works. However, this article only scratches the surface of how the allocator works, in particular, bins and chunks. If you want to learn more about the allocator, Azeria Labs and Sploitfun have awesome articles on this.

Arenas

Arenas are a struct that is shared between threads which contains references to heaps, as well as the bins (where the free chunks are stored at) and other state information about a heap. Threads assigned to each arena will allocate memory from that arena's free lists. In layman's terms, the arena is the metadata information that makes the allocator work. All of this state information is stored in the malloc_state struct.

A single threaded process has a single arena called the main arena. When threads become involved, multiple arenas are used in order to allow for concurrent heap data access, as heap data is specific to a process. These other arenas are called non-main arenas. So, this begs the question: "How does the allocator know which arena a chunk belongs to when being freed?" Excellent question!

Non-Main Arena Bit

First, we need to learn about the third bit of the size field (labeled as the 'N' bit in figure 1 above). This is used in order to specify if this chunk belongs in a non-main arena or not. If this bit is set, then the chunk belongs to a non-main arena and if the bit is NOT set, the chunk belongs to the main arena. This bit is set at allocation time, prior to the chunk being passed back to the user.

Finding the Arena

#define arena_for_chunk(ptr) \

chunk_non_main_arena (ptr) ? \

heap_for_ptr (ptr)->ar_ptr : \

&main_arena

The else (second part of the conditional from the ternary operator at line #4 above) is easier to understand, so we will start there. If the chunk is in the main arena (or, not a non-main arena chunk) then return a pointer to the main arena global variable. This is all that is done to find the main arena.

The first part of the conditional (line #3 above) is for a non-main arena chunk, which is specified by the non-main arena bit flag on the size discussed earlier. If this is the case, it calls another macro called heap_for_ptr, which is where most of the magic happens at. This macro is shown below:

#define heap_for_ptr(ptr) \

((heap_info *) \

((unsigned long) (ptr) & \

~(HEAP_MAX_SIZE - 1))) \

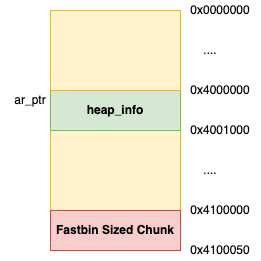

The heap_for_ptr macro takes the chunk being freed and removes a large portion of the least significant bits. This is done by ANDing the value with a large quantity of 0s. But why? Here's the punchline! The bottom of a non-main arena heap has a pointer to our arena! To be precise, this is the heap_info struct; the first field (ar_ptr) has the pointer to the arena. Notice the (ptr)->ar_ptr code being used in the arena_for_chunk macro above to get the arena from the heap_info struct.

This is quite clever! The user will always have a pointer to the malloc chunk (the chunk is being freed). So, by storing the arena at the bottom of the heap itself, we can just remove the least significant bits of the chunk to find the arena! If this does not make sense, I completely understand. Figure 2 has a visual representation of this that should help.

To wrap this up, here are the important notes:

- The

arena_for_chunkmacro gets the arena by using the chunk itself. - The non-main arena bit specifies which functionality to use for getting an arena.

- The main arena is stored in a global variable and the non-main arena does some clever math on the chunk in order to get the arena.

Flow of the Attack

The attack writes the pointer of a chunk being freed to a nearly arbitrary location; this is known as a WRITE-WHERE primitive. In the land of GLibC Heap exploitation, the Unsorted Bin Attack and the Large Bin Attack are good reference points for similar WRITE-WHERE primitives.

For the attack itself, it can be broken up into several stages:

- Heap Feng Shui (aligning controllable data with

heap_infostruct) - Creating a fake

heap_infoand arena - Corrupting a fastbin sized chunk

- Trigger the write via freeing the altered chunk

Heap Feng Shui

As in the original House of Mind, our main goal is to create a fake arena to be abused. In order to get the arena, we first need to create a fake heap_info struct. Where is this located at?

From looking at the macro heap_for_ptr (shown above) the pointer is found by removing a large portion of the least significant bits of the chunk. For instance, a heap pointer like 0x555558a4340 would be transformed into 0x55555000000 to find the heap_info struct. An example of this can be seen in Figure 2 above. So, to create a fake heap_info struct and set the pointer for the arena properly, we will need to setup the pointer at the EXACT right spot in memory. How is this done?

Alignment of Allocations

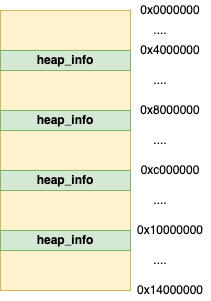

By allocating a large amount of chunks from the heap (ensure the size of each chunk smaller than the mmap_threshold), we can control a section in memory that can be used for a fake heap_info struct!

All we have to do is manipulate the heap so that the

attacker controls the area of memory that the corrupted chunk is aligned down to at intervals of HEAP_MAX_SIZE when calling arena_for_chunk; this can be seen in figure 3.

The HEAP_MAX_SIZE value varies depending on many settings. From empirical testing on a Ubuntu 16.04 64-bit machine, the size is 4MB. Because the main_arena is not aligned to values of HEAP_MAX_SIZE, this will require an average of '0x2000000' bytes of memory being allocated but no more than '0x4000000' bytes. In practice, this alignment is necessary because chunks at lower areas of the heap align down to an area of memory that is neither attack controlled nor mapped at all.

Once we have a chunk at the proper location lined up, we need to setup two fake structures for this to work. The alignment is just the first piece in the puzzle.

Setting Up Fake Heap_info

typedef struct _heap_info

{

malloc_state ar_ptr; // Arena

...

}

Once we have control of the aligned memory necessary for a heap_info struct, we need to prepare a fake one. Luckily for us, only a single field needs to be faked: ar_ptr. This field, which is the first entry of the heap_info struct, is the arena pointer. So, at a MAX_HEAP_SIZE aligned value, we need to specify the location (pointer) of the arena, which seems easy enough. Where do we put this at for maximum damage though? This is where things start to come together!

Fake Arena

Fake Arena Location

From here, we will work backwards from the WRITE to the get to the proper location of the fake arena. The WRITE takes place here when writing a fastbin pointer into the arenas free list. If we want to overwrite somewhere with our heap chunk, we have to consider two items: arena location and the fastbin size.

Because the fake arena is controlled directly by us and can be set to an arbitrary location; this is the most important value to consider. Recall, this is set in the fake heap_info->ar_ptr struct discussed above. If we want to overwrite a specific location in memory with our heap chunk, we must put the arena close to the target memory address.

Arena Writing Offset

struct malloc_state

{

mutex_t mutex;

int flags; // Formly max_fast

mfastbinptr fastbinsY[NFASTBINS]; // Fastbins

...

}

Besides the location of the arena, the writing of the chunk to a fastbin adds an offset to the WRITE. The fastbinsY array (shown above in the malloc_state struct) is an array of pointers that makes up the fastbins data storage; the fastbinsY array is the third entry in the malloc_state struct. This causes an offset of all of the struct fields that are ahead of the fastbinsY. For instance, in 2.23 (shown above), the offset is only 0x8 because of the 4 byte mutex and the 4 byte flags field. In 2.27+, the additional field have_fastchunks makes the offset 16 bytes until the fastbinsY array.

Finally, we can deal with the fastbins offset itself. When the chunk is written into the fastbinsY array, the index is based upon the chunk size. Different chunk sizes are written to different locations of the array, as each chunk size has its own bin. For instance, the smallest size 0x20 is written into the fastbinsY[0] and the largest size 0x80 is written into fastbinsY[6].

When choosing the location of the arena, the offset (to overwrite the proper memory location) must be carefully chosen based upon the size of the chunk being freed and the version of malloc.

Numerical Example

Let's assume our target is the address 0x5555554444 for GLibC 2.23 and we are writing a chunk of size 0x30. In order to write to the proper location, we would need to subtract the offset to fastbinsY (8 bytes) array. Additionally, we need to account for the index of the fastbins chunk being written. A size of 0x30 is the 2nd entry in the fastbins, which results in 8 more bytes needing to be subtracted. This ends up being 0x5555554444-8-8 = 0x5555554434 for the arena location to use. Now, when we write the chunk to the fastbins, the 8+8 will be added back into the address to write to our actual target 0x5555554444.

At first, the subtraction on the location of the arena does not seem intuitive. However, let's do this improperly with the same problem from above. If we choose the arena to be 0x5555554444 (our target) then the added offset will actually write to 0x5555554454 because the write occurs at arena + 0x10. Sometimes, I wish I would have paid more attention to math as a 3rd grader!

Fixing the Size Sanity Check

if next_chunk_size <= 0x10 or

next_chunk_size >= arena->system_mem

Crash

Using the fastbin variant of the House of Mind on recent versions of LibC only one security check needs to be bypassed. The code (pseudocode shown above) is validating that the next chunks size is not larger than the system allocation and the next chunks size is not smaller than the smallest possible chunk (0x10). In layman's terms, check if the next chunk size is crazy small or crazy large. If the above conditional is true, then the program will abort.

For the smaller end, the size of the next chunk needs to be greater than or equal to 0x10. This should be trivial to bypass because there is already a chunk above this one! So, you can just set the size of the fake chunk to line up with the original size of the chunk for the bypass. If this is not possible (for offset reasons), then this value could be written to a controllable offset.

The validation on the larger side is a little more complicated though. Because we have created the arena, the value of the arenas system_mem needs to be set. system_mem needs to be larger than the next chunk size (which has a minimum of 0x10). The system_mem value is at an offset of 0x880 on 2.23 and 2.29 is at 0x888 for the malloc_state struct. The offset depends on the version of GLibC's malloc_state struct but can easily be found while using GDB.

Once you find an alignment for the arena that has a proper value at the offset (or you write it yourself), the sanity check has been bypassed! With this check bypassed, the chunk will be put into the arenas fastbin to overwrite the memory address when the chunk is freed.

Corrupting a Fastbin Chunk

- Use the correct chunk size. This comes down to three things:

- Setting up a size of the chunk being freed for the proper offset on the WHERE place to write. Naturally, this has to be a fastbin sized chunk.

- Setting the chunk size to align with a valid

next_chunksize. - Set the non-main arena bit (bit #3 as shown in figure 1) for a chunk.

- The chunk MUST align down to the

HEAP_MAX_SIZEfor our fake arena to be used when the chunk is freed. - Use the heap value being written in a smart way.

Where to Write?

This is a WRITE-WHERE primitive. An attacker fully controls the location (memory address) being written to but NOT the value itself. Although this is mostly true, the value being written is the address of the chunk that we are freeing! So, we do not fully control this value but we can manipulate it by freeing chunks at different spots on the heap.

This primitive is usually a step in the chain, not the full exploit itself. A common use case for a WRITE-WHERE primitive is to overwrite a maximum index value to a large value in order to induce a further out of bounds read/write issues. For example, in the House of Husk, a maximum index value is overwritten in order to overwrite a variety of values in LibC to eventually pop a shell. This works quite well but in certain situations we can do better!

The value being written is a heap memory pointer. Because this is a pointer, we can use this to overwrite another pointer to cause further corruption. For example, let's picture a string that has a size of 0x200. Then, we use this technique to overwrite a pointer to this string. Later on, we go to edit the string which NOW only has a heap pointer of only 0x70 bytes because of the overwrite. When this edit occurs, it creates a major buffer overflow on the 0x70 sized chunk because it is expects something of size 0x200. If the chunk above our freed chunk (next chunk) has important data in it, this could lead to major memory corruption.

Boom Goes the Dynamite (Free)

Figure 5 - Full Technique Setup

heap_info struct, setup a fake arena and have crafted the perfect chunk, all while avoiding some security checks. Figure 5 (shown above) has the full technique setup in a diagram, in order to make it easier to see. Now all we have to do is call free on our crafted chunk to cause the memory write to occur! We did all of the hardwork already and get to reap the fruit of that work.

Profit

Once the chunk has been written, you can now use this to cause even more corruption to eventually pop a shell! Have fun with this WRITE-WHERE primitive.

Pros & Requirements

This attack allows for a WRITE-WHERE primitive of a heap pointer. This attack has its positives and negatives though.

Pros

- Works on all versions of Malloc! The bulk of techniques are version locked.

- Only requires a single byte controlled value overflow of the size and a heap leak.

- Writes a large value to a nearly arbitrary location. This can be used as the start of deeper memory corruption, such as the Unsorted Bin Attack usage in the House of Orange.

- Writes a heap pointer, which could be used in a similar way to the Unsafe Unlink attack, to cause further memory corruption by overwriting pointers.

- If the vulnerability for corrupting the chunk can be triggered multiple times, it is possible to trigger the write as many times as you need! For the location to write to, the original arena pointer can be edited or a different offset of

MAX_HEAP_SIZEcan be used to set a new fake arena.

Requirements

Instead of a cons section, below is a list of requirements for this to work:

- Ability to corrupt fastbin sized chunk size field. A single byte overflow would suffice this condition.

- Known location to write to (fake arena location). This may require a leak.

- Ability to create a fake

heap_infostruct. This comes down to two things:- An attacker needs to know their relative location on the heap for proper placement of the fake

heap_infostructure, which likely requires a heap leak. This could potentially be sprayed if the attacker has good enough control of the data though. - An attacker must be able to allocate a significant amount of heap data to reach the proper offset for the fake

heap_infostruct.

- An attacker needs to know their relative location on the heap for proper placement of the fake

system_mem.system_mem value of the fake arena must be larger than the next chunk value, with a minimum value of 0x10. This is because of a security chunk of the size of a freed chunk.Future Work

Heap exploitation techniques are awesome, but vastly complicated. Because of this, I post fairly consistently about it and contribute to the how2heap repository whenever something interests me. I am in the process of developing a GLibC Malloc Heap Exploitation workshop to help teach this very complex subject. I am looking to start giving this workshop at conferences by the end of the year (2021). I have applied to single conference so far (looking at you DEFCON) but plan on applying to many other technical conferences to give the course. So far, the beta testing has gone extremely well and I have been happy with the participants understanding of the material after taking the course. So, keep an eye out for this course in the future :)

Conclusion

This went from the heart-pounding new discovery to a let me explain article. Regardless, I hope you enjoyed this and learned something interesting about heap exploitation and computers today. I am happy that this forgotten technique will now be more accessible to those in the binary exploitation world.

Feel free to reach out to me (contact information is in the footer) if you have any questions or comments about this article. Cheers from Maxwell "ꓘ" Dulin.